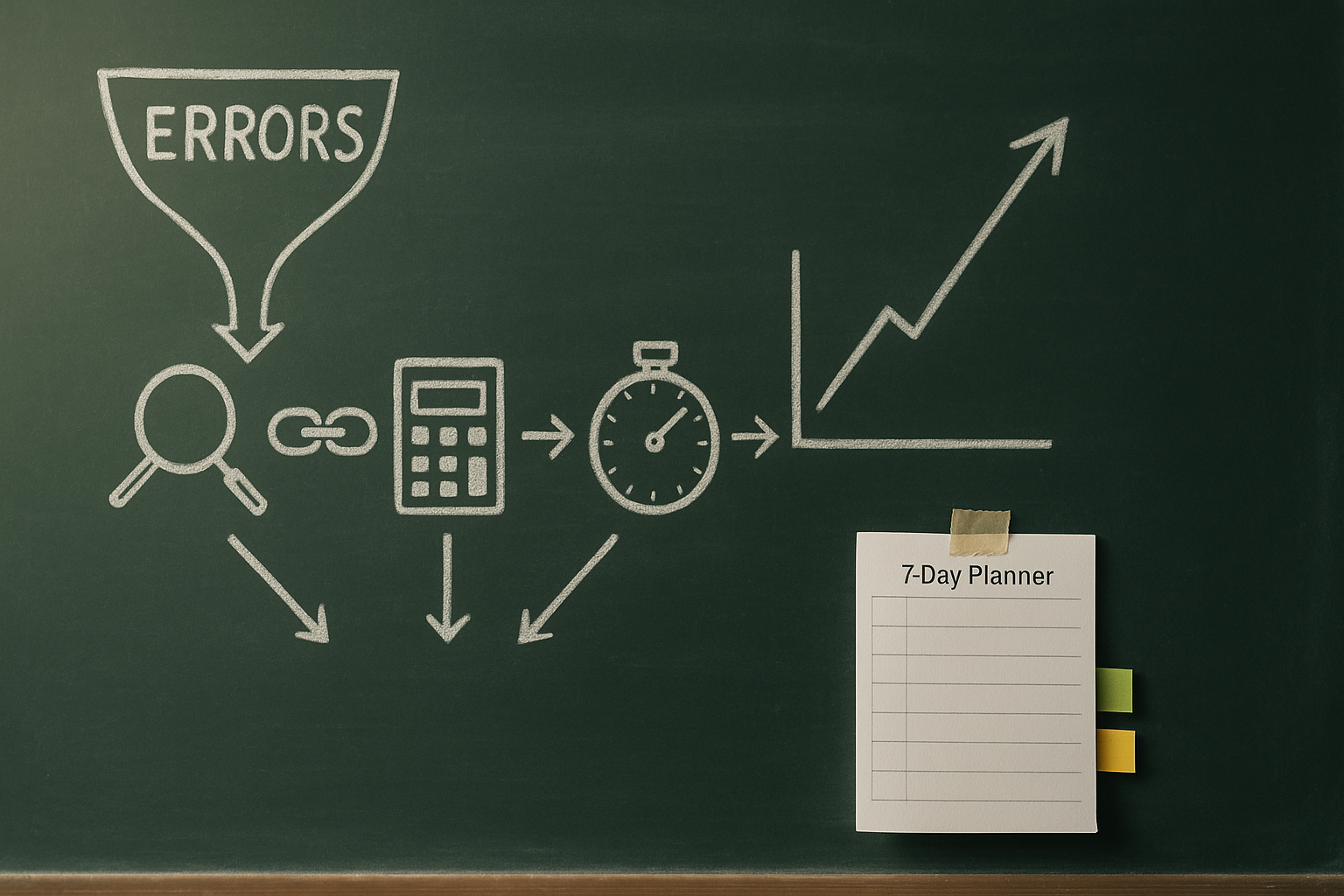

What this delivers: a 7-day schedule that mines your last two NBMEs and recent UWorld blocks for repeatable error types, prescribes targeted micro-drills, and verifies gains with structured retests. Expect fewer careless errors, tighter reading, and cleaner mechanistic reasoning by Day 7.

Define the Plateau, Calibrate the Target, and Choose the Right Inputs

Before you can break a plateau, you must define it precisely. In Step 1 prep, a plateau is a stable range of NBME predicted scores across at least two forms (e.g., Form A and Form B within 10 days) where your block-level correctness oscillates randomly but the mean does not climb. The goal for a seven-day intervention is a measurable, error-type–specific effect size: an improvement of at least 10–12 percentage points in the worst two error categories and a 2–4 point uptick in overall NBME predicted score. You will feed the program with three inputs: (1) your two most recent NBME forms (full review), (2) the last four timed, random UWorld blocks (40q each), and (3) your content anchors (class notes/First Aid/Pathoma/Sketchy references) to patch essential gaps.

Why error types instead of topics? Topics are infinite; error types are finite and repeatable. Most NBME misses fall into a short list—knowledge gaps, misreading or overlooking a clause, distractor susceptibility, premature closure/anchoring, computation/units slip, multi-step mechanistic chain break, graph/data interpretation error, or time-pressure gambles. This intervention prioritizes cross-topic skills that pay off across organ systems and task types (pathophysiology, pharmacology, interpretation).

Calibrate your target with a baseline snapshot. Compute: (a) mean % correct per NBME (overall and per block), (b) the distribution of misses by error type, and (c) a “floor block” metric: the worst block across your last two NBMEs. The program aims to raise the floor first, because improving the worst block yields outsized risk control on test day. Establish a fixed time budget, typically 3.5–4.5 hours/day for seven days, split among micro-drills (skill building), targeted content patches (only what is required to fix a pattern), and verification sets (timed questions that test the fix). For post-hoc analysis, use your two most recent NBME forms (counting back from yesterday), and reserve one fresh form for Day 7 verification if available. If not, use a two-block mixed-subject retest (80 novel items) drawn from a different Q-bank section you have not recently cycled. Clarity up front drives disciplined execution and prevents scope creep.
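The baseline snapshot can be computed by hand, but a short script keeps the arithmetic consistent from week to week. The following is a minimal sketch, assuming you have typed in per-block percent-correct values for each form; the scores, the dictionary layout, and the function name `baseline_snapshot` are illustrative and not part of any NBME export.

```python
from statistics import mean

# Hypothetical per-block percent-correct scores for the two most recent forms.
nbme_blocks = {
    "Form A": [68, 72, 60, 70],
    "Form B": [66, 74, 62, 71],
}


def baseline_snapshot(forms):
    """Return per-form mean percent correct and the single worst block (the floor block)."""
    per_form_mean = {name: mean(blocks) for name, blocks in forms.items()}
    # Flatten to (form, block number, score) so the worst block keeps its label.
    all_blocks = [
        (name, index + 1, score)
        for name, blocks in forms.items()
        for index, score in enumerate(blocks)
    ]
    floor_block = min(all_blocks, key=lambda item: item[2])
    return per_form_mean, floor_block


means, floor = baseline_snapshot(nbme_blocks)
print(means)   # mean percent correct per form
print(floor)   # (form, block number, score) of the floor block
```

Re-running the same function on Day 7 data gives a like-for-like comparison of the floor block.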

Standardize Your Error Taxonomy to Turn Chaos into a Short List

Ad hoc labels (“careless,” “dumb mistake”) don’t generalize. A standardized taxonomy pinpoints why an answer was missed and maps it to a correction you can drill. Use the following working set across NBMEs and UWorld. Every miss gets exactly one primary error type (choose the earliest root cause in the chain) and, optionally, a secondary tag if two independent failures occurred.

| Error type | Operational definition | Red flags in the stem/options | Primary intervention |
| --- | --- | --- | --- |
| Knowledge gap | Information needed was never learned or is unretrievable in time. | Pauses at basic recall, absent linkage terms (“therefore,” “because”). | Micro-fact sheet + retrieval practice (2×24h spaced), anchor example. |
| Misread/overlook | Ignored/altered a key constraint (age, timing, lab unit, “except”). | Missed modifiers, negations, units, ranges; changed numbers mentally. | Line-by-line readback + “constraints sweep” checklist on each item. |
| Distractor trap | Compelling but wrong option chosen despite partial knowledge. | Attraction to high-frequency buzzwords; early satisfaction. | Contrast drills (best vs. second-best) + decoy features catalog. |
| Premature closure | Stopped reasoning after first plausible diagnosis/mechanism. | Jumps from cue to diagnosis without testing disconfirmers. | “Prove it wrong” step + two discriminators before lock-in. |
| Computation/units | Math slip, formula mismatch, unit conversion miss. | Unrounded intermediate values, mixed units (mg vs μg). | Timed calc sprints + unit fences (write units at every step). |
| Chain break | Failure across a multi-step mechanism (A→B→C). | Can state endpoints but not the middle link. | Sketch the causal arc; rehearse aloud; fill the missing link. |
| Data interpretation | Mistaken read of graphs, ECGs, micrographs, or tables. | Label confusion, wrong axis, ignores reference ranges. | Extraction drills (title→axes→trend→outlier) before options. |
| Time-pressure gamble | Guess driven by clock rather than reasoning. | Long first pass, many marked items, late-block drop-off. | Pacing algorithm + “30-sec triage” + capped revisits. |

Keep the list short and behavioral. You’re not asking, “What topic do I not know?” You’re asking, “Which behavior caused the miss, and what drill makes that behavior rarer?” This framing lets you create reusable, interleaved practice that cuts across topics and raises the floor quickly.

Extract the Data: A 90-Minute Post-Hoc Review That Actually Changes Behavior

Set a 90-minute timer. Pull your two most recent NBMEs and last four UWorld blocks. For each missed item: (1) tag a single primary error type using the table above, (2) write a one-line “why I missed it” in verb-first language (“overlooked unit in Cr clearance; converted mg→μg wrong”), and (3) note the earliest decision point where the failure started (stem parsing, intermediate link, or option discrimination). Do not exceed 45 seconds per item; perfectionism dilutes the signal. When you’re done, rank order error types by frequency and compute per-type accuracy (correct/(correct+incorrect) for items where that type was at risk). Identify the top two error types and the worst organ system where they manifest.

Now summarize each NBME item with a three-step review script you’ll reuse all week:

- Stem → Strategy → Stats. Stem: one-sentence restatement of the clinical question (what is asked, with constraints). Strategy: the decision path you should have used (e.g., “check units → compute CrCl → compare to dosing table”). Stats: a quantitative or categorical discriminator (likelihood ratios, hallmark triad, mechanism signpost).

- Two discriminators. For every item, list two findings that separate the correct choice from the most tempting decoy.

- Prevention step. A single, observable behavior you will execute next time (“underline negations and units,” “draw A→B→C before options”).

Enter these into a compact tracker: columns for date, source (NBME/UWorld), system, task-type (pathophys/pharm/interpretation), error type, and prevention step. Create a filter view that isolates the top two error types; these become your Day 1–3 focus. Finally, capture 6–8 “anchor items”—exemplars that, once mastered, generalize widely (e.g., RAAS physiology chain, insulin pharmacokinetics, cholestatic vs hepatocellular patterns). These anchors feed the micro-drills you’ll run over the week. Your dataset is now actionable: it tells you what to drill, how to drill it, and how to verify the fix.
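A spreadsheet is enough for the tracker, but the same structure can be sketched in a few lines of code. The example below is an illustration under stated assumptions: the `Miss` record, the helper names `top_error_types` and `focus_view`, and every row of data are hypothetical, chosen only to mirror the columns listed above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Miss:
    date: str
    source: str           # "NBME" or "UWorld"
    system: str
    task_type: str        # "pathophys", "pharm", or "interpretation"
    error_type: str       # one primary tag from the taxonomy table
    prevention_step: str


# Hypothetical rows for illustration only.
tracker = [
    Miss("Day 0", "NBME", "renal", "pharm", "Misread/overlook", "underline units"),
    Miss("Day 0", "NBME", "cardio", "pathophys", "Distractor trap", "two discriminators"),
    Miss("Day 0", "UWorld", "renal", "interpretation", "Misread/overlook", "underline negations"),
    Miss("Day 0", "UWorld", "endocrine", "pathophys", "Chain break", "draw A->B->C"),
    Miss("Day 0", "UWorld", "GI", "pharm", "Distractor trap", "two discriminators"),
    Miss("Day 0", "NBME", "heme", "pathophys", "Misread/overlook", "constraints sweep"),
]


def top_error_types(rows, k=2):
    """Rank error types by how often they were tagged and return the top k."""
    counts = Counter(row.error_type for row in rows)
    return [error_type for error_type, _ in counts.most_common(k)]


def focus_view(rows, k=2):
    """Filter the tracker down to the top-k error types (the Day 1-3 focus)."""
    focus = set(top_error_types(rows, k))
    return [row for row in rows if row.error_type in focus]


print(top_error_types(tracker))
print(len(focus_view(tracker)))
```

Counting by `system` within the focus view, in the same way, identifies the worst organ system for each priority error type.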


The Seven-Day Schedule: Daily Micro-Drills, Targeted Patches, and Verification

The schedule is simple: each day cycles through Micro-drills → Verification → Patch → Retest, capped at ~4 hours. Verification sets are timed, mixed-subject blocks (20–40q) designed to expose the targeted error type under pressure. Patches are minimal content reviews needed to run tomorrow’s drills better—not open-ended studying. Retests are short (8–12 items) and same-day to confirm behavior change. A 10–12 minute debrief closes the loop.

| Day | Primary focus | Micro-drills (60–75 min) | Verification set (timed) | Retest & deliverable |
| --- | --- | --- | --- | --- |
| 1 | Error #1 (e.g., Misread) | Constraints sweep reps; negation/units underlining; 10 contrast items. | 1×20q mixed; mark only if missing info; 75s/q pace. | 8q same-type retest; prevention checklist v1. |
| 2 | Error #1 in worst system | Anchor items (system-specific); two-discriminator rehearsal. | 1×20q mixed emphasizing system; 75s/q pace. | 8q targeted; finalize checklist v2. |
| 3 | Error #2 (e.g., Distractors) | Best vs second-best contrasts; decoy feature cataloging. | 1×20q mixed; enforce forced comparison step. | 10q head-to-head contrasts; decoy notes v1. |
| 4 | Error #2 in worst system | Chain-of-reason drills; draw A→B→C before options. | 1×20q mixed; 70–75s/q; limited revisits. | 8q retest; chain sketch audit. |
| 5 | Computation/Units or Data-interp | Timed calc sprints; graph/ECG extraction protocol. | 1×20q quant/graphs heavy; show units each step. | 8q mini-OSCE style; unit fences checklist. |
| 6 | Pacing & mark-review flow | 30-sec triage practice; capped revisits; late-block stamina. | 1×40q full-length; apply pacing algorithm. | Block debrief; update pacing cutoffs. |
| 7 | Global verification | Light warm-up (8q); error-type flash review. | Fresh NBME or 2×40q mixed; exam conditions. | Score, tag errors, next-week plan. |

The cadence is deliberately narrow: two priority error types early, quantitative skills mid-week, pacing late, and a realistic verification on Day 7. If time is constrained, compress Days 3–5 into two days by blending distractor/chain drills with computation checkpoints.

Design Micro-Drills That Change Behavior in 7–10 Minute Bursts

Micro-drills are short, repeatable tasks targeting the behavior that produces the error—not the topic wrapper. Build them as follows:

- Misread/overlook: Run a “constraints sweep” on 10 stems (no options). For each, underline negations, temporal words (acute, chronic), age, pregnancy status, and units. Then reveal options and answer. Debrief: Did the underlined constraints appear in your justification?

- Distractor traps: Create pairs of the best vs. second-best options from prior misses. For each pair, write two discriminators that make the correct choice inevitable. Drill by answering only on these pairs with a 20-second shot clock.

- Premature closure: For 8 diagnostic vignettes, force an explicit falsification step: list two findings that would refute your leading hypothesis. Only after generating disconfirmers do you select an option.

- Computation/units: 15-minute sprint: 12 dosage/renal clearance calculations with units written at every line; reject any step where units are absent. Finish with two conversions (μg↔mg, mEq↔mmol).

- Chain break: Take complex mechanisms (e.g., 21-hydroxylase deficiency → cortisol ↓ → ACTH ↑ → adrenal hyperplasia) and sketch A→B→C→D. Then practice “missing link” prompts: hide B or C and reconstruct aloud.

- Data interpretation: Use an extraction protocol: title → axes/units → normal vs abnormal bands → trend → outliers → causally relevant variable. Answer the question only after this sequence.

- Pacing: The 30-second triage: if you are not on a viable pathway by 30 seconds, mark the item, choose a placeholder if necessary, and move on. Allow at most six revisits per block, and start none once fewer than 90 seconds remain on the clock.

Package drills with a visible prevention checklist taped above your monitor. Each item is an observable action (“write units,” “two discriminators before lock-in,” “draw A→B→C”). Rehearsal is situational; run micro-drills right before verification sets to maximize context matching and transfer. Keep a strict cap: when the drill quality decays, stop. Short, high-fidelity reps beat long, sloppy ones.

Verification Under Pressure: A Pacing Algorithm and a Five-Pass Review

Verification sets exist to stress the new behavior and reveal if it survives time pressure. Use a simple pacing algorithm: 75 seconds per question on average, with an early buffer. Target 15 questions in the first 17–18 minutes. If behind by >90 seconds at Q20, accelerate by suppressing revisits until Q35. Cap revisits at six per block; never spend your final 90 seconds on a brand-new item.
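The pacing rule reduces to simple arithmetic, which the sketch below spells out. It assumes you note elapsed time at checkpoints during a block; the constant and function names are hypothetical, and only the 75-second pace and the 90-second trigger at Q20 come from the text.

```python
SECONDS_PER_QUESTION = 75   # average pace stated in the text
BEHIND_TRIGGER_S = 90       # "behind by >90 seconds" trigger at Q20


def seconds_behind(questions_done, elapsed_seconds, pace=SECONDS_PER_QUESTION):
    """Positive result: behind the target pace. Negative result: ahead of it."""
    return elapsed_seconds - questions_done * pace


def hold_revisits_until_q35(elapsed_seconds_at_q20):
    """Q20 checkpoint: True when more than 90 s behind, meaning no revisits until Q35."""
    return seconds_behind(20, elapsed_seconds_at_q20) > BEHIND_TRIGGER_S


# Early buffer: 15 questions done at 17.5 minutes puts you 75 s ahead of pace.
print(seconds_behind(15, 17.5 * 60))
# Q20 reached at 27 minutes versus a 25-minute target: 120 s behind.
print(hold_revisits_until_q35(27 * 60))
```

At 75 seconds per question, 15 questions would take 18.75 minutes, so the 17–18 minute target banks roughly one to two minutes of buffer for the back half of the block.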

Pair pacing with a disciplined five-pass micro-review that you apply to any item that stalls:

- Parse the ask: Re-state the question in eight words or fewer (what, who, when, units/negation).

- Anchor the pathway: Name the pathway you’re using (diagnosis-first, mechanism-first, or calculation-first). If you can’t name it, you don’t have it—mark and move.

- Chain sketch: If mechanistic, write A→B→C once. No options yet.

- Two discriminators: Identify two features that separate the best option from the most tempting decoy.

- Numerical fence: For math, write the formula with units on each term; estimate magnitude before calculating.

Mark-review flow: mark only for (a) missing one key fact that could emerge with a quick re-read, (b) simple calculation deferred, or (c) tie between two options solvable with one discriminator. Do not mark conceptual voids. On review, devote no more than 45 seconds per marked item; abandon after that ceiling and protect your final two minutes for cleanups and scan-throughs for negations/units. End each verification with a short debrief: tag two items where the drill held under pressure and one where it failed; update tomorrow’s drill menu accordingly.

Measure Effect Size and Decide What Continues, Scales, or Stops

Data discipline is what converts effort into gains. Track three metrics daily: (1) per-type accuracy (e.g., “Misread items: 5/9 → 56%”), (2) block floor (worst 10-question stretch), and (3) late-block drift (delta in accuracy between Q1–20 and Q21–40). Set decision rules that trigger changes without debate.
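The three daily metrics can be computed from a simple list of right/wrong marks per question. This is a sketch under assumptions: the block data is made up, the function names are hypothetical, and "worst 10-question stretch" is interpreted here as the lowest-scoring run of 10 consecutive questions.

```python
def accuracy(results):
    """Percent correct for a list of 1 (correct) / 0 (incorrect) marks."""
    return 100 * sum(results) / len(results)


def late_block_drift(results):
    """Accuracy on Q21-40 minus accuracy on Q1-20, in percentage points."""
    return accuracy(results[20:40]) - accuracy(results[:20])


def block_floor(results, window=10):
    """Lowest accuracy over any run of `window` consecutive questions (assumed definition)."""
    return min(
        accuracy(results[start:start + window])
        for start in range(len(results) - window + 1)
    )


# Hypothetical 40-question block: strong start, weak finish.
block = [1] * 16 + [0] * 4 + [1] * 12 + [0] * 8

# Per-type accuracy uses the same helper on the subset of items tagged at risk,
# e.g. 5 of 9 misread-prone items answered correctly.
misread_items = [1] * 5 + [0] * 4

print(round(accuracy(misread_items)))   # per-type accuracy, rounded
print(late_block_drift(block))          # negative means the second half was worse
print(block_floor(block))               # worst 10-question stretch
```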

Continue

If a target error type improves by ≥10–12 points over 48 hours and holds under a 40q timed block, keep the drill and shrink time from 75 → 50 minutes while maintaining verification volume.

Scale

If a target error type improves by 6–9 points but remains unstable, scale by adding one contrast drill set (best vs. second-best) and push a system-specific verification block the next day.

Stop/Replace

If a drill yields <5 points improvement after two exposures, archive it. Replace with a different modality (e.g., from reading checks to chain sketches) targeting the same error type.
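Writing the decision rules as a function makes it harder to renegotiate them mid-week. The sketch below encodes the three rules as stated; the function name and arguments are hypothetical. The text leaves some cases open (for example, a gain between 5 and 6 points, or a gain of 10 or more that does not hold under a timed block), and the code returns "unspecified" for those instead of guessing. It also assumes the lower bound of the 10–12 point range is the Continue threshold.

```python
def drill_decision(delta_points, holds_under_timed_block, exposures):
    """Map a drill's measured effect to Continue / Scale / Stop-Replace.

    delta_points: change in per-type accuracy, in percentage points.
    holds_under_timed_block: whether the gain survived a 40q timed block.
    exposures: how many times the drill has been run.
    """
    # Continue: assumed threshold of 10 points (low end of the 10-12 range).
    if delta_points >= 10 and holds_under_timed_block:
        return "continue"
    # Scale: moderate gain that has not yet stabilized.
    if 6 <= delta_points <= 9 and not holds_under_timed_block:
        return "scale"
    # Stop/Replace: under 5 points after two exposures.
    if delta_points < 5 and exposures >= 2:
        return "stop/replace"
    # Cases the written rules do not cover are left to judgment.
    return "unspecified"


print(drill_decision(11, True, 2))
print(drill_decision(7, False, 2))
print(drill_decision(3, False, 2))
```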

Rapid-Review Checklist (use nightly, 5 minutes):

- Did I run today’s prevention checklist on every question in the verification block?

- Which two discriminators most often separated right vs. decoy today?

- Where did late-block drift show up? Was it pacing or fatigue?

- What single behavior will I rehearse for 7 minutes tomorrow before the block?

- Which anchor item will I retest first (8–10 questions) to confirm retention?

On Day 7, compute pre→post delta using the same measurement frame you used on Day 1: per-type accuracy and overall block performance. If global scores are steady but error-type deltas improved, stay the course—the global score often lags by 3–7 days while behaviors consolidate.

Troubleshooting Stalls: When the Needle Doesn’t Move (Yet)

If accuracy refuses to budge, first check fidelity. Are you executing the drill as written (timer on, observable behaviors visible, options hidden during chain sketches)? Fidelity failures masquerade as plateaus. Next, examine task-type mismatch: if your verification set under-represents the targeted failure mode (e.g., few data-interpretation items), you won’t see transfer. Adjust tomorrow’s mix deliberately (add an interpretation-heavy block or a pharm-calculation cluster).

Watch for cognitive overload. If you’re attempting to fix three error types at once, you’re likely diluting practice. Re-narrow to one primary and one secondary. Similarly, over-patching content is a trap; if a behavior drill keeps failing because a micro-fact is missing, patch just that fact with retrieval practice (2 spaced reps) and return to the drill. Do not open large content chapters mid-week.

Address pacing confounders: ensure you have a break plan (hydration and micro-reset after Q20), and enforce the revisit cap. If late-block drift persists, insert a 90-second eyes-closed reset at Q23–25 during one practice block; many candidates recover 2–3 questions from this alone.

Finally, recalibrate expectations. Some improvements are masked by sampling variability in short blocks. Think in terms of confidence intervals: the standard error of a block score is about √(p(1−p)/n), where p is your underlying proportion correct and n is the number of questions. For a 40-question block at p = 0.70, that is roughly 7 percentage points, so a move from 70% to 75% is not statistically secure in one block; look for consistent direction over three exposures. If you need external signal, take a fresh, well-spaced NBME after Day 7 to confirm transfer under exam-like conditions. Persist with a second week only if the processes are now automated and your logs show fewer tagged errors despite similar difficulty.
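The standard-error formula is easy to check numerically. The snippet below is a minimal sketch using the binomial approximation named in the text; the function name is illustrative, and the 20-question case is added only to show how much noisier the shorter verification sets are.

```python
from math import sqrt


def standard_error(p, n):
    """Standard error of a proportion p estimated from n independent questions."""
    return sqrt(p * (1 - p) / n)


se_40 = standard_error(0.70, 40)
se_20 = standard_error(0.70, 20)

print(round(100 * se_40, 1))   # in percentage points, 40-question block
print(round(100 * se_20, 1))   # in percentage points, 20-question block
```

With a standard error of about 7 points on a 40-question block, a 5-point gain sits inside one standard error, which is why the text asks for a consistent direction across three exposures.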

Integrate Content Anchors Without Losing the Skill Focus

Error-type training breaks plateaus fastest when paired with minimal, precisely targeted content anchors. For knowledge gaps, write one-page “micro-fact sheets” that map directly onto your chain sketches. Each sheet includes: the governing principle (e.g., “aldosterone ↑ → H⁺ secretion ↑ → metabolic alkalosis”), two trap contrasts (what it is often confused with), and an anchor item ID you will retest within 24 hours. Convert key facts into retrieval prompts (cloze deletions) and schedule exactly two spaced reps: tonight and 48 hours later. Avoid third or fourth reps this week; your bandwidth belongs to verification and behavior practice.

For pharmacology, pair mechanism cartoons with dosing rules and toxicity contrasts (e.g., nonselective vs selective β-blockers; acetylcholinesterase inhibitor crisis vs myasthenic crisis). For pathology, distill pathognomonic histo/micro findings into two or three discriminators that you will actively state before selecting an option. When you patch biochem, align it with a mechanism chain to keep the mental model intact (enzyme deficiency → metabolite accumulation → clinical triad → lab hallmark). The litmus test for every patch is transfer: if you can’t see how the patch would have changed an NBME decision at the earliest failure point, it’s not a priority this week.

Integrate anchors during the Patch phase only, and keep them subordinate to drills. If you overinvest in content, your measured per-type accuracy may stall because the behavior causing misses (misreads, premature closure) remains intact. Conversely, if behavior improves but you keep hitting the same content void (e.g., renal tubular acidoses), schedule a 30-minute focused patch and immediately run eight verification items. Content and skill are not rivals; content is scaffolding for the decision behaviors you’re institutionalizing.

