Why Biostatistics Logic Drives Step 1 Scoring

Step 1 increasingly tests interpretation over computation. Instead of solving long equations, you must decide whether findings are compatible with random chance, whether estimates are precise enough to act upon, and whether the study could have detected a clinically meaningful effect in the first place. Four concepts form a single chain: p-values, confidence intervals (CIs), power, and sample size. Understanding how they fit together is far more valuable than memorizing isolated definitions. The chain runs like this: larger samples shrink the standard error; a smaller standard error narrows the CI, which means a more precise estimate and more power to detect plausible effects; if a real effect exists and power is adequate, p-values tend to be small and CIs exclude the null. Nearly every biostatistics stem is a variation on this logic.

Because many students equate “statistically significant” with “important,” NBME writers love to separate the two. You may see a tiny p-value attached to a microscopic effect size that offers no bedside benefit, or a large, clinically meaningful effect that fails to reach significance because the trial recruited too few participants. High scores come from recognizing which limitation is present and articulating the correct remedy (e.g., “increase sample size” for low power versus “choose a mortality endpoint” for soft surrogates). Another common testable nuance is the difference between random error (imprecision) and systematic error (bias). Bigger samples can tame random error but do nothing to fix bias; a miscalibrated scale remains inaccurate even with infinite measurements.

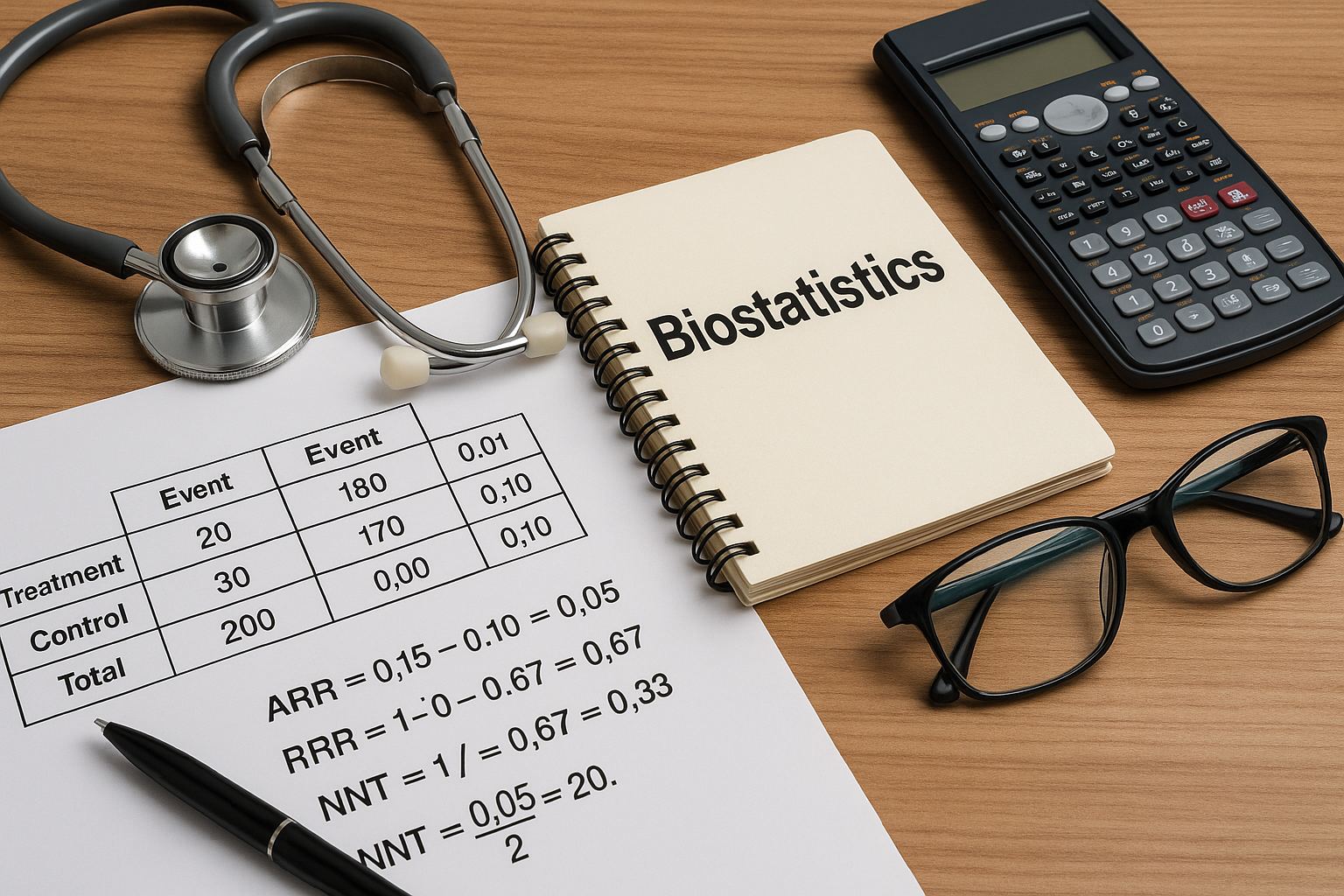

Approach each biostatistics item with a repeatable script: (1) Identify the measure (difference vs ratio). (2) Locate the null value (0 for differences, 1 for ratios). (3) Read the CI relative to the null and judge precision by its width. (4) Integrate sample size and variability to infer power. (5) Translate the statistically significant finding into clinical terms (absolute risk difference and time horizon) or, if non-significant, state the most likely reason (underpowered vs truly no effect). This disciplined workflow keeps you from being seduced by borderline p-values or dramatic relative changes divorced from baseline risk.
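Steps 1 to 3 of the script above can be sketched as a small helper. This is only an illustration: the function name `read_interval` and the example intervals are hypothetical, and it assumes the stem reports a 95% CI.

```python
def read_interval(measure, lower, upper):
    """Apply steps 1-3 of the script to a reported 95% CI (illustrative helper)."""
    if measure not in ("difference", "ratio"):
        raise ValueError("measure must be 'difference' or 'ratio'")
    # Step 2: the null value is 0 for differences and 1 for ratios.
    null = 0.0 if measure == "difference" else 1.0
    # Step 3: significant at roughly alpha = 0.05 only if the CI excludes the null.
    significant = not (lower <= null <= upper)
    # Width is a rough gauge of precision; narrower means more precise.
    width = upper - lower
    return {"null": null, "significant": significant, "width": width}


# Hypothetical stems: a risk ratio CI of 0.62 to 0.91, and a mean difference CI of -1.5 to 4.0.
print(read_interval("ratio", 0.62, 0.91))
print(read_interval("difference", -1.5, 4.0))
```

The first interval excludes 1, so it is significant; the second straddles 0, so the honest reading is "insufficient evidence," and steps 4 and 5 decide whether low power is the likely reason.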

P-Values: Interpreting, Not Worshipping the 0.05 Line

The p-value is the probability, assuming the null hypothesis is true, of observing results as or more extreme than those seen. It is not the probability the null is true, nor the probability the results occurred “by chance alone” in a colloquial sense. On Step 1, treat p as an index of evidence against the null. Smaller p-values indicate that the data would be unusual if the null were correct. Crucially, p depends on both effect size and sample size: even minuscule effects can yield tiny p-values in huge studies, while moderate effects may miss significance in small studies.
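To see how p depends on both effect size and sample size, here is a minimal sketch using a two-sample z-test. It assumes a known, equal SD in both groups and equal group sizes; the blood pressure numbers are invented for illustration.

```python
import math


def two_sided_p(mean_diff, sd, n_per_group):
    """Two-sided p-value from a z-test comparing two group means (known, equal SD)."""
    se = sd * math.sqrt(2.0 / n_per_group)
    z = mean_diff / se
    # Under the null, P(|Z| >= |z|) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2.0))


# Tiny effect, huge study: 0.5 mm Hg difference, SD 10, 20,000 per arm (hypothetical).
p_tiny_effect = two_sided_p(0.5, 10.0, 20000)
# Moderate effect, small study: 5 mm Hg difference, SD 10, 12 per arm (hypothetical).
p_small_study = two_sided_p(5.0, 10.0, 12)

print(f"tiny effect, huge n:      p = {p_tiny_effect:.2g}")
print(f"moderate effect, small n: p = {p_small_study:.2g}")
```

Under these assumptions the clinically trivial 0.5 mm Hg difference is highly significant, while the 5 mm Hg difference is not, which is exactly the separation of statistical and clinical significance that stems exploit.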

| p-value | What It Really Says | Most Likely Correct Action in Stems |
| --- | --- | --- |
| < 0.01 | Strong evidence against the null | Reject null; check effect size/clinical impact |
| 0.01–0.05 | Moderate evidence; result is borderline if underpowered | Interpret with CI width; consider replication |
| > 0.05 | Insufficient evidence to reject the null | Do not claim “no effect”; consider low power |

Classic traps: (1) “p = 0.06 means no difference.” Wrong—this is simply insufficient evidence at α = 0.05, and may reflect Type II error. (2) “p = 0.001 proves the treatment works.” No; it indicates incompatibility with the null under the model. Clinical significance still depends on absolute benefit and harms. (3) “Changing α after seeing the data.” This inflates Type I error and is methodologically incorrect. On test day, if a stem asks how to reduce false positives, tighten α (e.g., 0.01) or adjust for multiple comparisons; if it asks how to detect small differences, expand sample size rather than relaxing α.

Confidence Intervals: Precision, Significance, and Clinical Meaning

A 95% CI comes from a procedure that would capture the true parameter in 95% of repeated samples, assuming the design and assumptions hold. CIs provide two things p-values cannot: direction and magnitude estimates with uncertainty. For differences (e.g., mean difference, risk difference), if the CI excludes 0, the result is statistically significant at α ≈ 0.05. For ratios (RR, OR, HR), if the CI excludes 1, the result is significant. But the width of the CI, its precision, is just as important. Narrow CIs suggest stable estimates; wide CIs imply imprecision, often due to small sample size or high variability.
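As a rough illustration of how the same point estimate can be significant or not depending on sample size, the sketch below computes a simple Wald 95% CI for a risk difference. The Wald formula is one common approximation, chosen here for brevity, and the event counts are hypothetical.

```python
import math


def risk_difference_ci(events_a, n_a, events_b, n_b, z=1.96):
    """Wald 95% CI for the risk difference (group A minus group B)."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    diff = p_a - p_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return diff - z * se, diff + z * se


# Same risks (15% vs 25%) in a larger and a smaller hypothetical trial.
large_lo, large_hi = risk_difference_ci(30, 200, 50, 200)
small_lo, small_hi = risk_difference_ci(6, 40, 10, 40)

print(f"200 per arm: {large_lo:.3f} to {large_hi:.3f}")
print(f" 40 per arm: {small_lo:.3f} to {small_hi:.3f}")
```

With 200 per arm the interval sits entirely below 0; with 40 per arm the identical 10-point difference produces an interval that crosses 0 and is more than twice as wide.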

CI Interpretation Rules

- Excludes null → statistically significant.

- Width reflects precision (n↑ or variability↓ → narrower).

- Entirely above null → higher risk in the exposed group (for RR/OR/HR of an adverse outcome) or a positive difference; whether that means harm or benefit depends on the outcome being measured.

- Includes clinically trivial effects → “statistically significant but not important.”

Practical Exam Moves

- When two treatments’ CIs overlap heavily, prefer “no clear difference.”

- Between studies with same mean effect, pick the one with the narrower CI as more precise.

- Translate significant results into absolute terms (ARR/NNT) before deciding “best therapy.”
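The last exam move, converting a relative effect into absolute terms, is simple arithmetic. The sketch below assumes the stem gives a baseline (control) risk and a relative risk; the function name and the numbers are hypothetical.

```python
import math


def absolute_benefit(baseline_risk, relative_risk):
    """Return (ARR, NNT) implied by a baseline risk and a relative risk."""
    treated_risk = baseline_risk * relative_risk
    arr = baseline_risk - treated_risk
    if arr <= 0:
        return arr, None  # no absolute reduction, so NNT is undefined
    # Round away floating-point noise before rounding NNT up to a whole patient.
    nnt = math.ceil(round(1.0 / arr, 9))
    return arr, nnt


# The same RR of 0.80 applied to a high-risk and a low-risk population.
print(absolute_benefit(0.20, 0.80))
print(absolute_benefit(0.02, 0.80))
```

A 20% relative reduction corresponds to an NNT of 25 when baseline risk is 20%, but 250 when baseline risk is 2%, which is why relative changes should never be read apart from baseline risk.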

Remember that CIs are model-based. Bias in measurement or selection corrupts the interval regardless of its width. On Step 1, if a question contrasts “larger sample” vs “improve measurement accuracy,” choose the latter when the problem is systematic (e.g., miscalibrated device), because precision without accuracy simply yields a precisely wrong answer.

Type I and Type II Errors: Balancing False Alarms and Missed Signals

Type I error (α) is rejecting a true null—false positive. Type II error (β) is failing to reject a false null—false negative. Power is 1 − β. Lowering α reduces false alarms but makes missed signals more likely unless sample size increases. In multiple comparisons (e.g., testing five outcomes), unadjusted α inflates overall Type I error; Step 1 expects you to suggest a correction (e.g., Bonferroni) or prespecify a primary endpoint.

| Error | What It Means | How to Decrease It | Trade-off |
| --- | --- | --- | --- |
| Type I (α) | False positive | Use smaller α, adjust for multiplicity | ↓ Power unless n increases |
| Type II (β) | False negative | Increase n, reduce variability, target larger effect | ↑ Resources/time; may alter feasibility |

Exam stems frequently ask which modification addresses a stated problem. If investigators want to detect a smaller effect, the correct lever is increase sample size (or reduce variance through better measurements). If they want to avoid spurious positives, lower α or limit comparisons. If they fear both, prespecify one primary outcome, power the study for that outcome, and relegate the rest to exploratory analysis.
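The multiplicity problem is easy to quantify. This sketch assumes the tests are independent and that every null is true, which is a simplification; real trial outcomes are usually correlated.

```python
def familywise_error(alpha, n_tests):
    """Chance of at least one false positive across independent tests when all nulls are true."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_alpha(alpha, n_tests):
    """Per-test threshold that keeps the overall Type I error at or below alpha."""
    return alpha / n_tests


unadjusted = familywise_error(0.05, 5)
adjusted = familywise_error(bonferroni_alpha(0.05, 5), 5)

print(f"five outcomes at alpha = 0.05: {unadjusted:.3f}")
print(f"five outcomes, Bonferroni:     {adjusted:.3f}")
```

Testing five outcomes at α = 0.05 pushes the chance of at least one false positive to about 23%; dividing α by five brings it back under 5%, at the cost of power for each individual comparison.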

Power & Sample Size: Designing Studies That Can Find Real Effects

Power is the probability that a study will correctly reject a false null. It increases with larger sample size, larger true effect, lower variability, and a more permissive α. Step 1 will not ask you to compute power, but it will test your ability to reason about these levers. The most common scenario: a non-significant result from a small trial. The safest interpretation is not “no difference,” but “insufficient evidence; may be underpowered.” Conversely, a massive trial may yield p ≪ 0.05 for an effect too small to matter clinically—precise yet trivial.
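Step 1 will not ask for this calculation, but seeing the four levers move can make them easier to remember. The sketch below uses a normal approximation for a two-sided, two-sample comparison of means with equal SD and equal group sizes; all inputs are hypothetical.

```python
from statistics import NormalDist


def approx_power(effect, sd, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test (equal SD and group sizes)."""
    z = NormalDist()
    se = sd * (2.0 / n_per_group) ** 0.5
    z_crit = z.inv_cdf(1.0 - alpha / 2.0)
    # Probability of rejecting in the direction of the true effect; the
    # negligible chance of rejecting in the wrong direction is ignored.
    return z.cdf(abs(effect) / se - z_crit)


baseline = approx_power(effect=5, sd=10, n_per_group=30)
print(f"baseline:        {baseline:.2f}")
print(f"larger n (64):   {approx_power(5, 10, 64):.2f}")
print(f"larger effect:   {approx_power(8, 10, 30):.2f}")
print(f"lower SD (6):    {approx_power(5, 6, 30):.2f}")
print(f"alpha = 0.10:    {approx_power(5, 10, 30, alpha=0.10):.2f}")
```

Each change raises power relative to the baseline, but only sample size and measurement variability are levers an investigator can usually pull without changing the question or accepting more false positives.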

Before enrollment, researchers perform a sample size calculation specifying α, desired power (often 80–90%), expected effect size, and variability. On the exam, recognize that shrinking the detectable effect size (e.g., aiming to detect a 2 mm Hg difference rather than 5 mm Hg) requires a larger sample. If variability is high (large SD), the required sample grows; using a more reliable measurement or narrowing inclusion criteria can reduce variance and the needed n. Be alert to attrition: loss to follow-up reduces effective sample size and erodes power, sometimes differentially across arms, which also introduces bias.
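The relationship between detectable effect and required sample can be sketched with the standard normal-approximation formula for comparing two means, n per group = 2(z₁₋α/₂ + z₁₋β)²σ²/δ². The SD of 10 mm Hg is an assumed value for illustration, and real calculations often use t-based or software-specific refinements.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Approximate sample size per arm to detect a mean difference `delta`."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1.0 - alpha / 2.0)
    z_beta = z.inv_cdf(power)
    return math.ceil(2.0 * ((z_alpha + z_beta) * sd / delta) ** 2)


# Assumed SD of 10 mm Hg; compare detecting 5 mm Hg versus 2 mm Hg.
print(n_per_group(delta=5, sd=10))
print(n_per_group(delta=2, sd=10))
# Higher variability (SD 15) for the same 5 mm Hg target.
print(n_per_group(delta=5, sd=15))
```

Under these assumptions, moving the target from 5 mm Hg to 2 mm Hg raises the requirement from roughly 63 to 393 per arm, because n scales with (σ/δ)².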

Finally, connect power to confidence intervals. Low-powered studies tend to produce wide CIs that often include clinically important benefits and harms. When a stem shows a wide CI around a risk ratio that straddles 1.0, the best answer is typically to increase n or extend follow-up—actions that shrink the SE, narrow the CI, and improve the chance of detecting a true effect if it exists.

Precision vs Accuracy: Error Bars, Variability, and Bias

Precision describes the spread of repeated measurements; accuracy describes closeness to the truth. Random error (high variability) reduces precision and widens CIs; systematic error (bias) reduces accuracy and shifts estimates away from the truth. On Step 1, you’ll be asked to identify which problem dominates and to choose an appropriate fix. If repeated blood pressure measurements cluster tightly but are all 8 mm Hg too high, the device is precise but inaccurate—calibrate it. If readings scatter widely, improve protocol, training, or instrumentation to reduce variability.
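A quick simulation can make the distinction concrete. The two devices below are hypothetical: one reads 8 mm Hg high with little scatter, the other is unbiased but noisy. Readings are drawn from a normal distribution purely for illustration.

```python
import random
import statistics


def simulate_readings(true_value, bias, sd, n, seed):
    """Generate n readings with a fixed systematic offset and random scatter."""
    rng = random.Random(seed)
    return [true_value + bias + rng.gauss(0.0, sd) for _ in range(n)]


def summarize(readings, true_value):
    """Return (mean error, spread): mean error reflects accuracy, spread reflects precision."""
    mean_error = statistics.mean(readings) - true_value
    spread = statistics.stdev(readings)
    return mean_error, spread


TRUE_BP = 120.0
miscalibrated = simulate_readings(TRUE_BP, bias=8.0, sd=1.0, n=1000, seed=1)
noisy = simulate_readings(TRUE_BP, bias=0.0, sd=10.0, n=1000, seed=2)

print("miscalibrated device (mean error, spread):", summarize(miscalibrated, TRUE_BP))
print("noisy device (mean error, spread):        ", summarize(noisy, TRUE_BP))
```

Taking more readings from the miscalibrated device only tightens the estimate around the wrong value, whereas averaging more readings from the noisy device converges toward the truth.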

Key Relationships

- SE = SD / √n → increasing n narrows CIs.

- High SD (biologic or measurement variability) → wide CIs even with decent n.

- Bigger n cannot fix bias; only better design/measurement can.

- Blinding reduces information (measurement) bias; randomization reduces selection bias and confounding.
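The first relationship in the list can be checked numerically. This sketch assumes a normal-based 95% interval for a mean (z = 1.96) and an SD of 12 chosen for illustration.

```python
import math


def ci_half_width(sd, n, z=1.96):
    """Half-width of a normal-based 95% CI for a mean: z * SD / sqrt(n)."""
    return z * sd / math.sqrt(n)


for n in (25, 100, 400):
    print(f"n = {n:>3}: +/- {ci_half_width(12.0, n):.2f}")
```

Because SE scales as 1/√n, quadrupling the sample only halves the interval, so gains in precision get progressively more expensive. Nothing in this formula involves bias, which is why a larger n leaves a systematic error untouched.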

Design Implications

Choose patient-important endpoints, prespecify analyses, and apply intention-to-treat to preserve randomization. For precision-limited studies, increase n or measurement reliability; for accuracy-limited studies, address confounding or calibration—otherwise you’ll be precisely wrong.

When comparing two reports with the same point estimate, pick the one with the narrower CI as more precise. When one report uses a biased method (e.g., retrospective self-report), prefer the prospectively collected, blinded assessment even if its CIs are slightly wider—the latter is more accurate and decision-relevant.

Rapid-Review Checklist & High-Yield Pitfalls

- P-value meaning: P(data at least this extreme | null). It is not P(null | data).

- CI logic: Excludes null → statistically significant; width = precision.

- Power: 1 − β. Increase by n↑, effect size↑, variability↓, or α↑.

- Sample size: Detecting smaller effects requires larger n.

- Statistical vs clinical: Small p may still be trivial; convert to ARR/NNT when relevant.

- Multiple testing: Without adjustment, α inflates → more Type I errors.

- Bias vs imprecision: Bigger n fixes imprecision, never bias.

- Borderline results: p ≈ 0.05 + wide CI → replicate or increase n.

- Loss to follow-up: Reduces effective n and may bias estimates.

- Patient-important endpoints: Prefer mortality/morbidity endpoints over surrogates when judging clinical significance.

If the stem says…

- “Results non-significant; small sample, high SD” → Underpowered; increase n or reduce variability.

- “Very small p in large cohort; trivial effect size” → Statistically significant, clinically irrelevant.

- “Wide CI crossing 1 for RR” → Imprecise; insufficient evidence.

Mental Flow (10 seconds)

- Identify measure type and null value.

- Check CI vs null for significance.

- Judge CI width → precision.

- Infer power from n and SD.

- Translate to clinical impact or state “insufficient evidence.”

Training These Skills with MDSteps (and Making Them Automatic)

Targeted repetition cements interpretation skills. In the MDSteps Adaptive QBank (9,000+ questions), filter for tags like “biostatistics,” “study design,” and “evidence interpretation.” For each item, write a one-line explanation in the form: “Because CI [excludes/includes] the null and is [narrow/wide], the result is [significant/uncertain]; power is likely [adequate/low] due to [n/SD].” Our AI Tutor can rephrase stems to stress different levers (α, β, n, SD) until you can predict the effect on p and CI without calculating.

Convert misses into memory assets with the automatic flashcard deck from your errors and export to Anki for spaced retrieval. Build micro-drills: (1) Present a CI and ask whether it’s significant, (2) Modify n by 4× and predict the new CI width qualitatively (SE scales as 1/√n, so the width roughly halves), (3) Ask what design change fixes the stated problem (bias vs imprecision). In the Analytics & Readiness Dashboard, watch your accuracy on interpretation items rise and your median time per item drop below 60 seconds, an indicator that this logic has become reflexive.

Finally, cross-link to clinically meaningful metrics. When ratios are significant, translate them into absolute terms using the baseline risk provided in the stem. This forces you to ask, “Is the statistically significant result big enough to matter to a patient?” That habit prevents overcalling trivial wins and undercalling truly important effects—exactly the kind of disciplined reasoning Step 1 rewards.

Suggested citation style: “Biostatistics on Step 1: P-Values, Confidence Intervals, Power & Sample Size.” MDSteps Blog. Accessed {{today}}.
