Why Biostatistics of Risk Matters More Than Raw Significance

Step 1 increasingly rewards your ability to translate a study’s result into clinical utility. That means moving beyond “p < 0.05” toward measures that tell a clinician and a patient what actually changes at the bedside. Absolute risk, absolute and relative risk reductions (ARR and RRR), and the number needed to treat/harm (NNT/NNH) compress complex trial outputs into intuitive quantities: how many patients benefit (or are harmed) over a defined time horizon. These are the metrics that drive guideline tables and informed consent, and they appear in vignettes where a resident presents a risk difference, a relative risk, or a Kaplan–Meier estimate and asks what to do next.

Absolute risk is simply the event rate in a group over a stated period. Risk difference is the control event rate minus the treatment event rate; when it favors treatment it is called the absolute risk reduction (ARR), and when treatment raises the event rate its magnitude is the absolute risk increase (ARI). Relative risk (RR) compares those risks as a ratio, treatment over control; from it, RRR = 1 − RR when treatment lowers risk, and the relative risk increase (RRI = RR − 1) applies when treatment raises it. NNT = 1/ARR (rounded up) captures “how many to treat to prevent one event,” while NNH = 1/ARI (rounded up) captures “how many to treat to cause one additional adverse event.” These values are time-bound (e.g., “NNT over 3 years”) and baseline-risk dependent: the same relative effect can produce dramatically different absolute effects across low- vs high-risk populations, a favorite NBME subtlety.
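The definitions above fit in a few lines of Python. This is a minimal sketch, assuming both risks are already proportions measured over the same time horizon; the names `effect_summary` and `ceil_count` are hypothetical, and the 4% vs 2% harm example uses made-up numbers. The `round(..., 9)` step is a design choice that keeps floating-point noise (for example, 0.15 − 0.10 evaluating to 0.04999999999999999) from pushing a ceiling up by one.

```python
import math


def ceil_count(x):
    # NNT/NNH are whole patients, always rounded up. round(x, 9) first
    # removes float noise such as 20.000000000000004.
    return math.ceil(round(x, 9))


def effect_summary(risk_treatment, risk_control):
    """Label a two-arm comparison as benefit (ARR/NNT) or harm (ARI/NNH).

    Risks are proportions (0.10, not 10%) over the same time horizon.
    """
    diff = risk_control - risk_treatment  # > 0 means treatment lowered risk
    rr = risk_treatment / risk_control
    if diff > 0:
        return {"ARR": diff, "RR": rr, "RRR": 1 - rr, "NNT": ceil_count(1 / diff)}
    if diff < 0:
        ari = -diff
        return {"ARI": ari, "RR": rr, "RRI": rr - 1, "NNH": ceil_count(1 / ari)}
    return {"ARR": 0.0, "RR": rr, "NNT": None}  # no difference: NNT undefined


print(effect_summary(0.10, 0.15)["NNT"])  # 20
print(effect_summary(0.04, 0.02)["NNH"])  # 50 (hypothetical harm example)
```

The function deliberately returns different labels (ARR/RRR/NNT vs ARI/RRI/NNH) depending on direction, mirroring the habit of naming the measure before quoting the number.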

On exam day, stems often bury the lede with relative measures that sound large but translate to tiny absolute effects. A “50% reduction” in a rare outcome can be clinically unremarkable if the baseline risk is minuscule; conversely, a modest relative reduction in a common outcome may produce a strikingly small NNT. The test also probes whether you can distinguish real outcome improvement (fewer deaths, strokes) from merely earlier detection (lead-time bias or overdiagnosis). The antidote is a consistent workflow: (1) extract event counts or risks, (2) compute ARR or ARI, (3) invert for NNT/NNH, (4) anchor interpretation to baseline risk and time horizon, and (5) sanity-check units (per 100, per person-year) and denominators. Mastering this translation makes you resilient to framing biases and unlocks quick, defensible answers.

From 2×2 Table to ARR/RRR/NNT: A Fast, Error-Proof Workflow

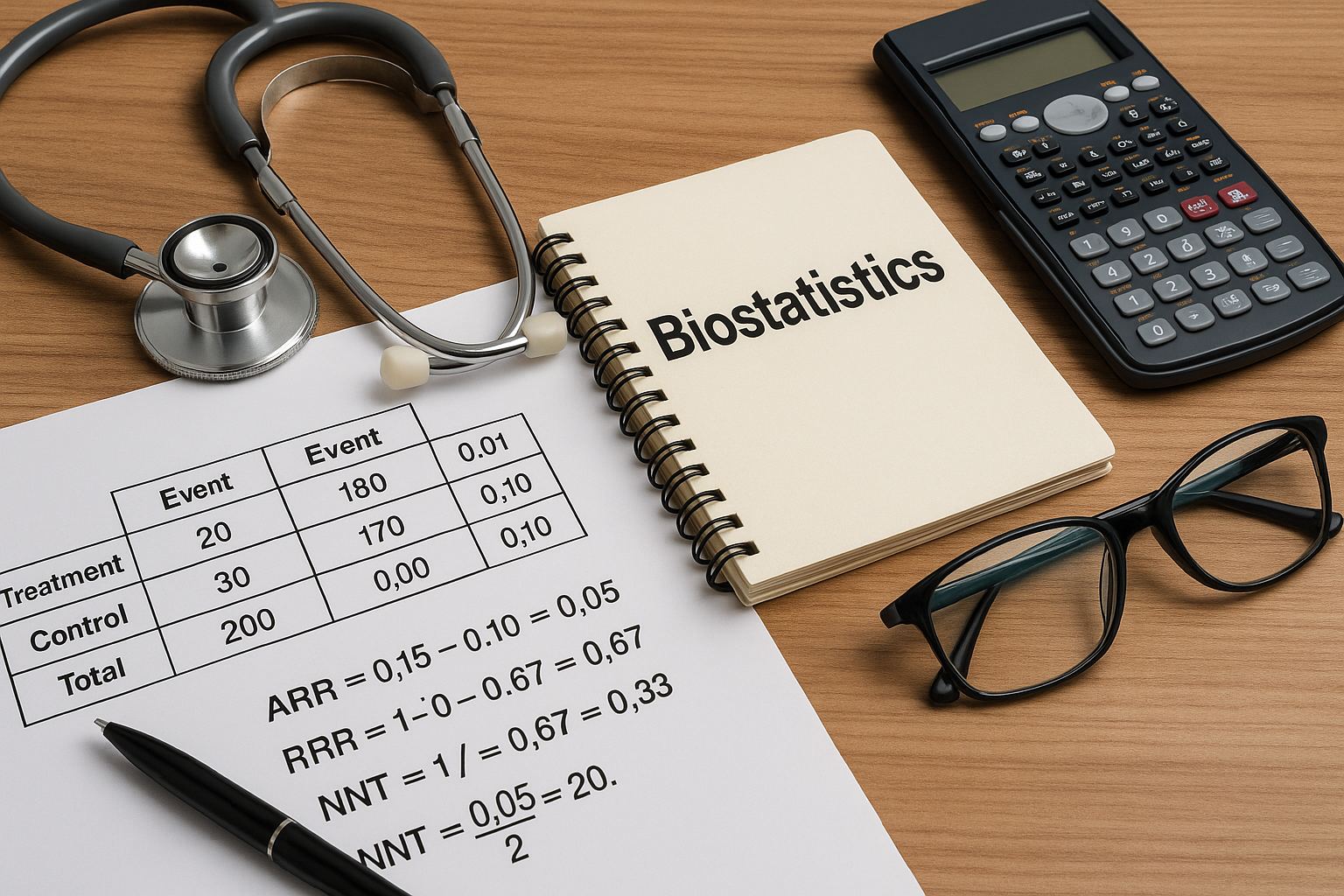

Nearly every ARR/RRR/NNT question can be reduced to a 2×2 table. Start by labeling rows as Treatment vs Control and columns as Event vs No Event. Compute the risk in each arm as events divided by the total in that arm. Then compute ARR = Risk(control) − Risk(treatment) when treatment helps; if that difference is negative, treatment raised risk, and its magnitude, Risk(treatment) − Risk(control), is the ARI. RR = Risk(treatment)/Risk(control), and RRR = 1 − RR. Finally, NNT = 1/ARR and NNH = 1/ARI, each rounded up. Keep values as proportions (e.g., 0.10, not 10%) until the final step to avoid mistakes.

| Group | Event | No Event | Total | Risk (event/total) |
|---|---|---|---|---|
| Treatment | 20 | 180 | 200 | 0.10 |
| Control | 30 | 170 | 200 | 0.15 |

ARR = 0.15 − 0.10 = 0.05 (5 percentage points); RR = 0.10/0.15 = 0.67; RRR = 1 − 0.67 = 0.33 (33%); NNT = 1/0.05 = 20.
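To check the table arithmetic exactly, the sketch below works from raw counts with Python's standard `fractions.Fraction`, which keeps 30/200 − 20/200 as exactly 1/20. The helper name `two_by_two` is hypothetical, and it assumes treatment lowers risk (it raises an error otherwise, since that case calls for ARI/NNH).

```python
from fractions import Fraction
import math


def two_by_two(events_tx, total_tx, events_ctl, total_ctl):
    """Exact ARR, RR, RRR and NNT from raw counts (per-arm denominators)."""
    risk_tx = Fraction(events_tx, total_tx)
    risk_ctl = Fraction(events_ctl, total_ctl)
    arr = risk_ctl - risk_tx
    if arr <= 0:
        raise ValueError("treatment did not lower risk; compute ARI/NNH instead")
    rr = risk_tx / risk_ctl
    return {
        "risk_tx": risk_tx,
        "risk_ctl": risk_ctl,
        "ARR": arr,
        "RR": rr,
        "RRR": 1 - rr,
        "NNT": math.ceil(1 / arr),  # exact arithmetic, so no float noise
    }


for name, value in two_by_two(20, 200, 30, 200).items():
    print(name, round(float(value), 2))
```

It prints the same values as the table: ARR 0.05, RR 0.67, RRR 0.33, NNT 20.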

Watch for three traps. Trap 1: wrong denominator. Step 1 sometimes gives you events out of the combined sample or per 1,000 person-years. Always re-anchor to each arm’s denominator. Trap 2: rounding direction. NNT and NNH always round up (ceiling), even when the decimal is small (e.g., 5.01 → 6). Trap 3: choosing ARR vs RRR. Clinical relevance rides on ARR/NNT, not RRR alone. When the question asks “Which intervention yields the greatest clinical benefit?” compare absolute risk reductions across options.
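Traps 1 and 2 are easy to demonstrate with the same counts as the 2×2 example (20/200 treated, 30/200 control). This is an illustrative sketch: dividing both arms by the pooled 400 patients is the hypothetical mistake being shown, and `round_up` is a helper name introduced here, not a standard function.

```python
import math

events_tx, n_tx = 20, 200
events_ctl, n_ctl = 30, 200
n_pooled = n_tx + n_ctl

# Right: each arm's events over that arm's own denominator (ARR about 0.05).
arr_right = events_ctl / n_ctl - events_tx / n_tx
# Trap 1: dividing both arms by the pooled 400 halves each risk (ARR about 0.025).
arr_wrong = events_ctl / n_pooled - events_tx / n_pooled


def round_up(x):
    # Trap 2: NNT/NNH always round up. round(x, 9) strips float noise first.
    return math.ceil(round(x, 9))


print(round_up(1 / arr_right))  # 20
print(round_up(1 / arr_wrong))  # 40 (wrong: the denominator trap doubles NNT)
print(round_up(5.01))           # 6
```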

Speed Workflow

- Compute treatment and control risks.

- Risk difference → ARR (or ARI).

- Invert for NNT (or NNH) and round up.

- Contextualize with baseline risk & time horizon.

Unit Sanity Check

- Percent vs proportion (e.g., 5% vs 0.05).

- Per person-years vs fixed follow-up.

- Same time horizon across arms/interventions.

Absolute vs Relative Framing: Baseline Risk Drives Clinical Impact

Relative measures are seductive because they look big in headlines, but absolute differences determine real-world benefit. Consider two trials of the same drug. In a low-risk population with a 2% annual event rate, an RR of 0.75 produces an ARR of 0.5% (NNT ≈ 200). In a high-risk subgroup with a 20% rate, the same RR yields an ARR of 5% (NNT = 20). The relative effect is identical, but clinical utility changes by an order of magnitude. Step 1 frequently embeds this contrast in vignettes where the “best therapy” for one patient may not be the best for another due to different baseline risks.
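The baseline-risk effect can be reproduced by holding RR at 0.75 and changing only the control risk. A minimal sketch, assuming the RR transfers unchanged between populations (the premise of the example above); `nnt_at_baseline` is a hypothetical helper name.

```python
import math


def nnt_at_baseline(control_risk, rr):
    """Apply a fixed RR to a baseline (control) risk; return (ARR, NNT)."""
    treated_risk = control_risk * rr
    arr = control_risk - treated_risk
    # Round NNT up; round(..., 9) strips float noise before the ceiling.
    return arr, math.ceil(round(1 / arr, 9))


for baseline in (0.02, 0.20):
    arr, nnt = nnt_at_baseline(baseline, rr=0.75)
    print(f"baseline {baseline:.0%}: ARR {arr:.1%}, NNT {nnt}")
```

Running it gives an NNT of 200 for the 2% baseline and 20 for the 20% baseline, matching the figures in the paragraph.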

Two corollaries follow. First, treat the patient in the stem, not the average trial participant. If you’re given age, comorbidity, or biomarker clues that imply high baseline risk, expect a larger ARR (and a smaller NNT) from the same RR. Second, interpret harm the same way: adverse effects scale with baseline risk too. In a frail group, a higher baseline risk of adverse events yields a larger ARI and therefore a smaller NNH, which can outweigh the NNT benefit; that trade-off is testable when stems ask whether to start or stop prevention.
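The same scaling applies to harm. The sketch below assumes a hypothetical drug that multiplies the risk of a serious adverse event by 1.5 and a hypothetical benefit NNT of 50 in both groups; all numbers are invented for illustration, and `nnh_at_baseline` is an illustrative name. Comparing NNH with NNT only counts events and ignores how severe each event is.

```python
import math


def nnh_at_baseline(baseline_harm_risk, rr_harm):
    """ARI and NNH when a drug multiplies a baseline adverse-event risk."""
    ari = baseline_harm_risk * rr_harm - baseline_harm_risk
    return ari, math.ceil(round(1 / ari, 9))


NNT_BENEFIT = 50  # hypothetical benefit, assumed equal in both groups

for label, baseline in (("robust", 0.01), ("frail", 0.06)):
    ari, nnh = nnh_at_baseline(baseline, rr_harm=1.5)
    verdict = "more harms than benefits" if nnh < NNT_BENEFIT else "more benefits than harms"
    print(f"{label}: ARI {ari:.1%}, NNH {nnh} vs NNT {NNT_BENEFIT} -> {verdict}")
```

With these assumed inputs the robust group has an NNH of 200, while the frail group's NNH drops to 34, below the NNT of 50.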

Relative framing also fuels cognitive errors. “A 40% reduction” can mask a trivial absolute effect in rare outcomes (e.g., 0.1% → 0.06%: ARR 0.04%, NNT 2,500). Conversely, modest relative changes in common outcomes create meaningful NNTs. The exam’s remedy is simple: convert to absolute terms first, then compare. When multiple therapies are listed, compute (or approximate) ARR for each. If exact numbers aren’t provided, recall that larger baseline risk × similar RR → larger ARR → smaller NNT. When confidence intervals are shown, check whether the CI for the RR crosses 1.0 (no effect). If not, and absolute risks are given, propagate the extremes to get a sense of NNT stability. This frames not only “which option benefits most” but also “how certain is that benefit.”


Screening Questions: When ARR Is Real vs Illusory (Lead-Time, Length-Time, Overdiagnosis)

Screening vignettes test whether you can separate earlier detection from better outcomes. A classic trap: a new screening test “increases 5-year survival” but doesn’t reduce disease-specific mortality. Survival goes up because of lead-time bias (diagnosis made earlier, clock starts sooner), not because fewer people die. Similarly, length-time bias makes a test appear effective by preferentially detecting slower-growing disease with better prognosis. Overdiagnosis inflates incidence by labeling indolent lesions as “cancer,” boosting survival statistics without changing mortality. On Step 1, the right answer often hinges on choosing a mortality-based endpoint or looking for a true ARR in bad outcomes (deaths, strokes) rather than improved detection metrics.

| Bias | Clue in Stem | What Looks Better | What Actually Proves Benefit | Mitigation |
|---|---|---|---|---|
| Lead-time | Earlier diagnosis, unchanged mortality | Survival duration | Lower disease-specific mortality (ARR) | Use mortality endpoints; randomized rollout |
| Length-time | Screening detects milder cases | Better outcomes among screened | Lower mortality in an RCT comparing screened vs unscreened groups | Randomize the invitation to screen |
| Overdiagnosis | Incidence up, mortality flat | Apparent improved survival | Lower mortality, not just more diagnoses | Track hard endpoints; longer follow-up |
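Lead-time bias can be made concrete with a toy cohort. Every number here is hypothetical: each patient's age at death is fixed, and screening is assumed only to move diagnosis 3 years earlier. Five-year survival from diagnosis jumps while age at death does not change at all, which is the pattern described in the lead-time row above.

```python
# Hypothetical cohort: (age at symptomatic diagnosis, age at death).
# Screening is assumed to move diagnosis earlier without changing death.
patients = [(60, 62), (64, 67), (58, 64), (70, 74), (66, 72)]
LEAD_TIME = 3  # years gained in diagnosis, not in life


def five_year_survival(lead_time):
    """Fraction alive 5+ years after diagnosis, given how early dx moves."""
    survivors = sum(
        1 for dx_age, death_age in patients
        if death_age - (dx_age - lead_time) >= 5
    )
    return survivors / len(patients)


def mean_age_at_death():
    # Identical in both scenarios: screening never changes death here.
    return sum(death_age for _, death_age in patients) / len(patients)


print("5-yr survival, no screening:", five_year_survival(0))
print("5-yr survival, screening:   ", five_year_survival(LEAD_TIME))
print("Mean age at death:", mean_age_at_death())
```

Here 5-year survival rises from 0.4 to 1.0 while mean age at death stays at 67.8 in both scenarios: a survival "benefit" with zero ARR in deaths.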

When a stem asks whether to recommend screening, compute or reason through ARR in bad outcomes, not detection rates. If two programs have similar sensitivity/specificity, prioritize the one with evidence that reduces mortality or major morbidity. If you’re only given relative measures (e.g., HR or RR), recover the absolute impact by applying them to the stated baseline risk of the population. Finally, demand an appropriate time horizon—some benefits require years to accrue, and insufficient follow-up can hide or exaggerate ARR. The exam’s “best next step” often rewards demanding mortality endpoints and recognizing when a “benefit” is just a statistical mirage.

Design & Time Horizon: When NNT/NNH Is Stable—and When It Isn’t

ARR and NNT live inside a study’s design and follow-up window. In randomized controlled trials, intention-to-treat (ITT) analysis preserves randomization and yields conservative, decision-relevant estimates; per-protocol analyses can exaggerate benefit by excluding non-adherers. In cohort studies, confounding threatens ARR estimates because patients who choose therapy differ in baseline risk. Step 1 won’t ask you to compute propensity scores, but it may ask how best to control confounding: randomization is the answer, because it balances both measured and unmeasured confounders, whereas stratification or matching handles only measured ones.

Time horizon matters. NNT shrinks over longer follow-up when benefits accumulate (e.g., statins over years), but harms may appear earlier (e.g., drug-related rash). Stems may provide person-year incidence; in that case, convert rates to risks over the specified period, or compare rate differences directly (which gives patient-years of treatment per event prevented). If the event is rare and follow-up short, ARR may be small and NNT large; extending follow-up can reveal substantial benefit. In the other direction, competing risks (patients who die from other causes first) can dilute ARR in elderly or multimorbid groups.
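When a stem gives incidence per person-years, one common conversion assumes a constant event rate, so cumulative risk over t years is 1 − exp(−rate × t); for small rate × t this is close to rate × t. The rates below (20 vs 15 events per 1,000 person-years over 3 years) are hypothetical, `rate_to_risk` is an illustrative name, and the constant-rate assumption is a simplification.

```python
import math


def rate_to_risk(events_per_1000_py, years):
    """Cumulative risk over `years`, assuming a constant event rate."""
    rate = events_per_1000_py / 1000
    return 1 - math.exp(-rate * years)


YEARS = 3
risk_ctl = rate_to_risk(20, YEARS)  # hypothetical control rate
risk_tx = rate_to_risk(15, YEARS)   # hypothetical treatment rate
arr = risk_ctl - risk_tx
print(f"3-year ARR {arr:.2%}, NNT {math.ceil(1 / arr)}")

# Quick approximation (rate x time), reasonable when rate x time is small.
arr_approx = (20 - 15) / 1000 * YEARS
print(f"approx ARR {arr_approx:.2%}, NNT {math.ceil(round(1 / arr_approx, 9))}")
```

The exact conversion gives an NNT of 71 over 3 years; the linear shortcut gives 67, close enough for ranking options but not identical.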

Two additional wrinkles emerge. First, censoring and loss to follow-up create uncertainty: if more high-risk patients drop out from one arm, ARR can be biased. Check for balanced dropout or use ITT to anchor interpretation. Second, composite endpoints inflate event rates and can make NNT look better while hiding that only “soft” outcomes moved. Step 1 sometimes asks which endpoint is most appropriate; prefer patient-important outcomes (death, MI, stroke) over surrogate markers unless the surrogate has validated linkage to outcomes.

Bottom line for the exam: when asked to compare interventions, favor the one with lower absolute risk of bad outcomes over a clinically sensible timeframe, measured within a randomized design using ITT. When the question hinges on calculation, compute ARR/NNT precisely; when it hinges on judgment, interrogate the time horizon, endpoint quality, and internal validity signals that make NNT believable.

Uncertainty & CIs for ARR and NNT: Reading the Fine Print

ARR, like any estimate, has sampling variability. If the study reports a confidence interval (CI) for the risk difference, you can infer the CI for NNT by inverting the limits (being mindful of sign). For example, ARR = 0.05 with 95% CI 0.02 to 0.08 implies NNT point estimate 20 and a plausible range from 1/0.08 = 12.5 (→ 13) to 1/0.02 = 50. Wide ARR CIs yield unstable NNTs—an NBME-friendly subtlety when choices contrast a “dramatic NNT” from a tiny trial versus a “modest NNT” from a large, precise trial.
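Inverting an ARR interval takes a little care with sign. The sketch below, using the hypothetical helper `nnt_interval`, reproduces the 0.02 to 0.08 example and also shows what happens when the ARR interval crosses 0: the NNT range runs from NNH through infinity to NNT rather than between two finite numbers. The second example interval (−0.01 to 0.04) is invented for illustration.

```python
import math


def _round_up(x):
    # Round up to a whole patient; round(x, 9) first strips float noise.
    return math.ceil(round(x, 9))


def nnt_interval(arr_low, arr_high):
    """Turn an ARR confidence interval (proportions) into NNT/NNH terms.

    Positive ARR means benefit (NNT); negative ARR means harm (NNH).
    """
    if arr_low > 0:  # whole interval shows benefit
        return f"NNT {_round_up(1 / arr_high)} to {_round_up(1 / arr_low)}"
    if arr_high < 0:  # whole interval shows harm
        return f"NNH {_round_up(1 / -arr_low)} to {_round_up(1 / -arr_high)}"
    # Interval includes 0: the range passes through "no effect" (infinity).
    parts = []
    if arr_low < 0:
        parts.append(f"NNH {_round_up(1 / -arr_low)}")
    parts.append("infinity")
    if arr_high > 0:
        parts.append(f"NNT {_round_up(1 / arr_high)}")
    return " to ".join(parts)


print(nnt_interval(0.02, 0.08))   # NNT 13 to 50
print(nnt_interval(-0.01, 0.04))  # NNH 100 to infinity to NNT 25
```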

When the ARR CI includes 0, the effect is not statistically significant at that confidence level, and the NNT interval becomes discontinuous: it runs from NNH through infinity to NNT, so the data are compatible with both benefit and harm. On Step 1, the safest interpretation is: “Evidence is insufficient to conclude a benefit; do not recommend solely on these data.” If only RR and its CI are given, check whether the CI crosses 1.0. If not, and the control risk is provided, approximate the ARR range by multiplying the control risk by each RR limit to get treated risks, then subtracting each from the control risk. This shows how stable the NNT is across plausible effects.
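Recovering an ARR range from an RR interval works the same way. A minimal sketch, assuming a hypothetical trial with control risk 10% and RR 0.70 (95% CI 0.55 to 0.90); `arr_range_from_rr_ci` is an illustrative name. If the upper RR limit were above 1.0, the worst-case ARR would come out negative, which is the NNH side of the interval.

```python
import math


def arr_range_from_rr_ci(control_risk, rr_low, rr_high):
    """Apply each RR confidence limit to the control risk.

    The lower RR limit gives the largest ARR (best case); the upper
    limit gives the smallest ARR (worst case, negative if rr_high > 1).
    """
    best_arr = control_risk - control_risk * rr_low
    worst_arr = control_risk - control_risk * rr_high
    return worst_arr, best_arr


# Hypothetical trial: control risk 10%, RR 0.70 (95% CI 0.55 to 0.90).
worst, best = arr_range_from_rr_ci(0.10, 0.55, 0.90)
nnt_best = math.ceil(round(1 / best, 9))
nnt_worst = math.ceil(round(1 / worst, 9))
print(f"ARR {worst:.1%} to {best:.1%}; NNT {nnt_best} to {nnt_worst}")
```

This gives an ARR range of 1.0% to 4.5%, meaning the NNT could plausibly be anywhere from 23 to 100.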

Another consideration is heterogeneity. A single overall ARR can mask subgroup differences (effect modification). If the stem hints at a biologically plausible modifier (e.g., smoking status, LDL level), it’s reasonable to expect different ARRs across strata even when the RR is similar. Finally, recognize that “fragile” results, where very few outcome flips would reverse significance, often come with wide ARR CIs and volatile NNTs. While Step 1 won’t ask you to compute a fragility index, it may ask which study is more reliable: choose the one with narrower CIs around clinically important outcomes.

Practical CI Rules

- ARR CI lies entirely above 0 → benefit is statistically supported; invert ends for NNT range.

- RR/HR CI excludes 1.0 → effect likely present; translate to absolute terms before deciding.

- Prefer precise, patient-important outcomes over impressive but imprecise estimates.

Mental Math & Shortcuts: Getting to the Right NNT Under the Clock

Speed comes from a few repeatable approximations. First, when the arm denominators are round numbers (100, 200, 1,000), convert to percentages immediately (e.g., 8/200 = 4%). Second, when RRs are provided without absolute risks, apply the RR to the stated or implied control risk to recover the treated risk, then subtract. If control risk is 10% and RR = 0.7, treated risk = 7%, ARR = 3%, and 1/0.03 ≈ 33.3, so NNT = 34 after rounding up. Third, when only odds ratios (ORs) are reported and the outcome is rare (<10%), OR ≈ RR. Otherwise, don’t convert OR to RR on Step 1 unless the stem provides the necessary baseline risk.
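To see why OR ≈ RR only for rare outcomes, the sketch below applies the Zhang and Yu conversion, RR = OR / (1 − p0 + p0 × OR), where p0 is the control-group risk. This goes beyond what Step 1 asks you to compute and is itself an approximation (less reliable for adjusted ORs); the OR of 0.50 and the two control risks are hypothetical, and `rr_from_or` is an illustrative name.

```python
def rr_from_or(odds_ratio, control_risk):
    """Approximate RR from an OR and the control-group risk p0.

    Zhang and Yu conversion: RR = OR / (1 - p0 + p0 * OR).
    """
    p0 = control_risk
    return odds_ratio / (1 - p0 + p0 * odds_ratio)


# Hypothetical OR of 0.50 at a rare and a common control risk.
for p0 in (0.02, 0.40):
    print(f"control risk {p0:.0%}: OR 0.50 -> RR {rr_from_or(0.50, p0):.3f}")
```

With a 2% control risk the RR comes out near 0.51, almost the OR; with a 40% control risk it is about 0.63, so reading the OR as an RR would overstate the benefit.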

Here are quick patterns to memorize. ARR 10% → NNT 10. ARR 5% → NNT 20. ARR 2% → NNT 50. ARR 1% → NNT 100. Having these anchors lets you sanity-check calculations. For harms, use the same anchors for NNH (with ARI instead of ARR). Always round up, so the answer never overstates benefit or harm.

| Given | Approximate Conversion | Fast Answer |
|---|---|---|
| Control 12%, RR 0.75 | Treated ≈ 9% → ARR ≈ 3% | NNT ≈ 34 (round up) |
| Control 30%, RR 0.9 | Treated ≈ 27% → ARR ≈ 3% | NNT ≈ 34 |
| Control 4%, RR 0.5 | Treated ≈ 2% → ARR ≈ 2% | NNT ≈ 50 |
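The table rows can be checked with exact fractions, which also shows why two rows land on 34: 1/0.03 is 33.3, and rounding up gives 34. A short sketch; the rows are copied from the table and nothing else is assumed.

```python
from fractions import Fraction
import math

# (control risk, RR) pairs from the fast-answer table, as exact fractions.
rows = [
    (Fraction(12, 100), Fraction(75, 100)),
    (Fraction(30, 100), Fraction(90, 100)),
    (Fraction(4, 100), Fraction(50, 100)),
]

for control, rr in rows:
    treated = control * rr
    arr = control - treated
    nnt = math.ceil(1 / arr)  # exact arithmetic, then round up
    print(f"control {float(control):.0%}, RR {float(rr)}: "
          f"treated {float(treated):.0%}, ARR {float(arr):.0%}, "
          f"1/ARR = {float(1 / arr):.1f}, NNT {nnt}")
```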

Finally, when forced to choose between multiple interventions with incomplete data, default to the option with the largest absolute reduction in bad outcomes over a credible timeframe, especially when backed by randomization. If two options have similar ARR but one has a much worse ARI for serious adverse events, the NNH may tilt you away from it. Step 1 often hides the correct choice behind this benefit–harm balance rather than a single flashy statistic.
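The benefit–harm balance can be sketched with two hypothetical drugs: Drug B prevents slightly more bad outcomes but causes far more serious adverse events. All numbers are invented, and the `net_benefit` score is a deliberately crude assumption that counts one prevented outcome and one serious harm as equally bad; real decisions weigh severity.

```python
import math

# Hypothetical options: ARR for the bad outcome, ARI for a serious adverse event.
options = {
    "Drug A": {"arr": 0.04, "ari": 0.005},
    "Drug B": {"arr": 0.045, "ari": 0.03},
}


def up(x):
    # Round NNT/NNH up; round(x, 9) strips float noise first.
    return math.ceil(round(x, 9))


def net_benefit(option):
    # Crude assumption: one prevented outcome and one serious harm weigh the same.
    return option["arr"] - option["ari"]


for name, o in options.items():
    print(f"{name}: NNT {up(1 / o['arr'])}, NNH {up(1 / o['ari'])}, "
          f"net events avoided per 100 treated {net_benefit(o) * 100:.1f}")

best = max(options, key=lambda name: net_benefit(options[name]))
print("Higher net benefit:", best)  # Drug A
```

Drug B has the smaller NNT (23 vs 25) but an NNH of 34 versus 200 for Drug A, so Drug A comes out ahead once harms are counted.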

Rapid-Review Checklist & High-Yield Pitfalls

- Compute risks first. Event/total for each arm. Keep as proportions until the final step.

- ARR, not just RRR. Clinical relevance tracks ARR → NNT/NNH. Round NNT/NNH up.

- Baseline risk rules. Same RR in a high-risk group → larger ARR → smaller NNT.

- Time horizon matters. Quote or infer the follow-up window for any ARR/NNT.

- Endpoints, not detection. Mortality/morbidity reductions prove benefit; survival and incidence can mislead.

- Beware person-years. Convert rates to risks for the same window before computing ARR.

- CI sanity. ARR CI that crosses 0 → NNT unstable/undefined; avoid strong claims.

- Composite traps. Big “wins” driven by soft outcomes inflate ARR artificially.

- OR ≈ RR only if rare. Otherwise avoid OR→RR shortcuts without baseline risk.

- Decision rule. Prefer randomized, ITT-based estimates with patient-important endpoints.

If the stem says…

- “Survival improved, mortality unchanged” → Think lead-time/overdiagnosis.

- “RR identical across strata, baseline risk differs” → ARR differs; choose higher-risk group for more benefit.

- “Per-protocol shows big effect, ITT smaller” → Trust ITT for decisions.

ARR ↔ NNT Anchors

10% → 10; 5% → 20; 2% → 50; 1% → 100. Use to check arithmetic and plausibility.

Train the Skill: How to Practice ARR/NNT the Smart Way

Skill builds from targeted reps. In MDSteps’ Adaptive QBank (9,000+ board-style questions), flag every item that mentions event counts, RRs/HRs, or screening outcomes. For each, rewrite the stem as a 2×2, compute risks, ARR/ARI, and NNT/NNH, and add a one-line clinical translation (“Treat 20 patients for 3 years to prevent one MI”). Use the automatic flashcard deck from misses to store your worked 2×2 and the final takeaway; export to Anki to interleave spaced recall. The AI Tutor can challenge you to convert relative measures to absolute terms and to explain which endpoint proves benefit.

Next, build micro-drills: set a timer for 5 minutes and solve three ARR/NNT conversions from scratch. Then practice interpretation drills: for baseline risks of 2%, 10%, and 20% with a fixed RR of 0.7, compute ARR and NNT, and notice how the “same drug” looks different as baseline risk changes. In the analytics and readiness dashboard, track accuracy and time on biostats items; aim for <60 seconds per ARR/NNT task with ≥85% accuracy.

Finally, rehearse screening logic without numbers. Ask: “Does this test lower mortality or just find disease earlier?” Tie your answer to a mortality ARR when provided. On the exam, write mini-checkmarks on your scratch paper: “risks → ARR → NNT → endpoint → horizon → CI.” It keeps you honest when the stem is noisy. Over time, this workflow becomes automatic, so when an NBME vignette throws rates, odds, or medians at you, you can still land on the clinical metric that matters.

Suggested citation style: “Biostatistics in Clinical Decisions: Absolute Risk, ARR/RRR, NNT/NNH on Step 1.” MDSteps Blog. Accessed [date].
